What is Amazon Kinesis Data Streams

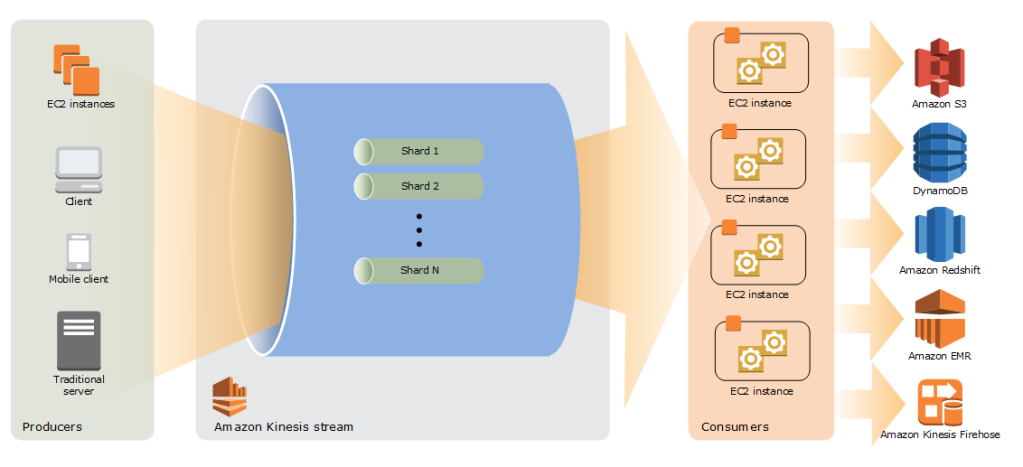

Amazon Kinesis Data Streams is a fully managed AWS service that you can use to collect and process large streams of data records in real time.

Key concepts:

- Data record – A unit of data that is stored by Kinesis Data Streams. Data records are composed of a sequence number, a partition key, and a data blob, which is an immutable sequence of bytes. Kinesis Data Streams does not inspect, interpret, or change the data in the blob in any way. A data blob can be up to 1 MB.

- Data stream – A resource that represents a group of data records.

- Shard – A uniquely identified sequence of data records in a stream. A data stream is composed of one or more shards. Each shard provides a fixed unit of capacity.

- Producer – A source that puts data into a Kinesis data stream.

- Consumer – An application that gets records from data streams and processes them.

- Kinesis Producer Library (KPL) – An easy-to-use, highly configurable library that helps you write to a data stream.

- Kinesis Client Library (KCL) – An easy-to-use, highly configurable library that helps you consume and process data from a data stream.
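To make the record and shard concepts above more concrete, here is a minimal sketch of how a partition key could select a shard. Kinesis Data Streams documents that it maps partition keys to shards with an MD5 hash. The `DataRecord` class, the `shard_for` helper, and the assumption that shards split the hash space into equal ranges are illustrative only and not part of any AWS SDK.

```python
import hashlib
from dataclasses import dataclass

# Assumption: the 1 MB blob limit is treated as 1024 * 1024 bytes.
MAX_BLOB_BYTES = 1024 * 1024


@dataclass(frozen=True)
class DataRecord:
    """Toy model of a record: a partition key plus an opaque byte blob."""
    partition_key: str
    data: bytes

    def __post_init__(self):
        # Reject blobs larger than the limit described in the text.
        if len(self.data) > MAX_BLOB_BYTES:
            raise ValueError("data blob exceeds 1 MB")


def shard_for(partition_key: str, shard_count: int) -> int:
    """Return a shard index for the key.

    Assumption: shards divide the 128-bit MD5 hash space into equal,
    contiguous ranges. Real streams can have uneven ranges after resharding.
    """
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    hash_value = int.from_bytes(digest, "big")
    range_size = 2**128 // shard_count
    # Clamp so that the top of the hash space still lands in the last shard.
    return min(hash_value // range_size, shard_count - 1)
```

Records that share a partition key always land on the same shard, which is why a skewed choice of key can overload one shard while others sit idle.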

What happens if a producer exceeds the ingest limits:

Each shard can ingest up to 1 MB of data per second or 1,000 records per second. If your data producer exceeds either of those values, Kinesis Data Streams raises an exception (ProvisionedThroughputExceededException), and your producer needs to retry the records that were not written successfully. Retrying failed records is a valid approach if the spike is very short-lived. However, to ingest more than 1,000 records per second for a longer duration, you need to increase the number of shards in your stream. You can reshard manually, or automate it so that the shard count follows the load.
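The two options above (retry a short spike, add shards for sustained load) can be sketched as follows. This is a rough illustration, not an AWS client: `put_batch` is a hypothetical callable that sends records and returns the ones that were rejected, and the per-shard limits are written as constants. The 1,000 records per second figure comes from the text; the 1 MB per second figure is the documented per-shard write limit, treated here as 1024 * 1024 bytes by assumption.

```python
import math
import time
from typing import Callable, List, Sequence

RECORDS_PER_SHARD_PER_SEC = 1000        # figure quoted in the text
BYTES_PER_SHARD_PER_SEC = 1024 * 1024   # assumed value for the 1 MB/s limit


def shards_needed(records_per_sec: float, bytes_per_sec: float) -> int:
    """Smallest shard count that satisfies both per-shard write limits."""
    by_records = math.ceil(records_per_sec / RECORDS_PER_SHARD_PER_SEC)
    by_bytes = math.ceil(bytes_per_sec / BYTES_PER_SHARD_PER_SEC)
    return max(1, by_records, by_bytes)


def put_with_retry(
    put_batch: Callable[[List[str]], List[str]],  # hypothetical sender
    records: Sequence[str],
    max_attempts: int = 5,
    base_delay: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> List[str]:
    """Resend only the rejected records, backing off exponentially.

    Returns the records that still failed after max_attempts tries.
    """
    pending = list(records)
    for attempt in range(max_attempts):
        pending = put_batch(pending)
        if not pending:
            return []
        if attempt < max_attempts - 1:
            sleep(base_delay * 2 ** attempt)  # 0.1 s, 0.2 s, 0.4 s, ...
    return pending
```

If `put_with_retry` keeps returning leftovers, the spike is probably not short-lived, and `shards_needed` gives a first estimate of the target shard count.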

What is SQS

Amazon Simple Queue Service (Amazon SQS) offers a secure, durable, and available hosted queue that lets you integrate and decouple distributed software systems and components.

Queue types

| Standard queue | FIFO queue |

|---|---|

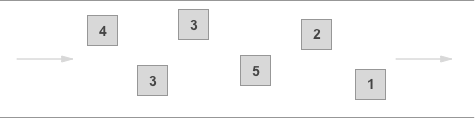

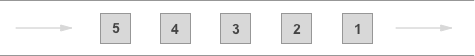

Unlimited Throughput – Standard queues support a nearly unlimited number of API calls per second, per API action (SendMessage, ReceiveMessage, or DeleteMessage).At-Least-Once Delivery – A message is delivered at least once, but occasionally more than one copy of a message is delivered.Best-Effort Ordering – Occasionally, messages are delivered in an order different from which they were sent. | High Throughput – If you use batching, FIFO queues support up to 3,000 transactions per second, per API method (SendMessageBatch, ReceiveMessage, or DeleteMessageBatch). The 3000 transactions represent 300 API calls, each with a batch of 10 messages. To request a quota increase, submit a support request. Without batching, FIFO queues support up to 300 API calls per second, per API method (SendMessage, ReceiveMessage, or DeleteMessage).Exactly-Once Processing – A message is delivered once and remains available until a consumer processes and deletes it. Duplicates aren’t introduced into the queue.First-In-First-Out Delivery – The order in which messages are sent and received is strictly preserved. |

|  |

| Send data between applications when the throughput is important, for example:Decouple live user requests from intensive background work: let users upload media while resizing or encoding it.Allocate tasks to multiple worker nodes: process a high number of credit card validation requests.Batch messages for future processing: schedule multiple entries to be added to a database. | Send data between applications when the order of events is important, for example:Make sure that user-entered commands are run in the right order.Display the correct product price by sending price modifications in the right order.Prevent a student from enrolling in a course before registering for an account. |
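Because a standard queue delivers at least once, a consumer may see the same message twice. One common way to cope is to make processing idempotent by remembering which message IDs were already handled. The sketch below assumes messages arrive as `(message_id, body)` tuples and keeps the seen IDs in memory; both are simplifications for illustration, and `process_once` is a hypothetical helper rather than part of any SQS SDK.

```python
from typing import Callable, Iterable, List, Optional, Set, Tuple

Message = Tuple[str, str]  # (message_id, body); hypothetical shape


def process_once(
    messages: Iterable[Message],
    handler: Callable[[str], str],
    seen: Optional[Set[str]] = None,
) -> List[str]:
    """Run handler on each distinct message ID and skip repeated deliveries."""
    seen = set() if seen is None else seen
    results = []
    for message_id, body in messages:
        if message_id in seen:
            continue  # duplicate delivery from a standard queue
        results.append(handler(body))
        seen.add(message_id)  # mark as done only after the handler succeeds
    return results


# "m1" is delivered twice but handled once.
delivered = [("m1", "resize a.png"), ("m2", "resize b.png"), ("m1", "resize a.png")]
handled = process_once(delivered, str.upper)
```

This only removes duplicates; it does not restore ordering. When order matters, the FIFO column of the table is the better fit.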

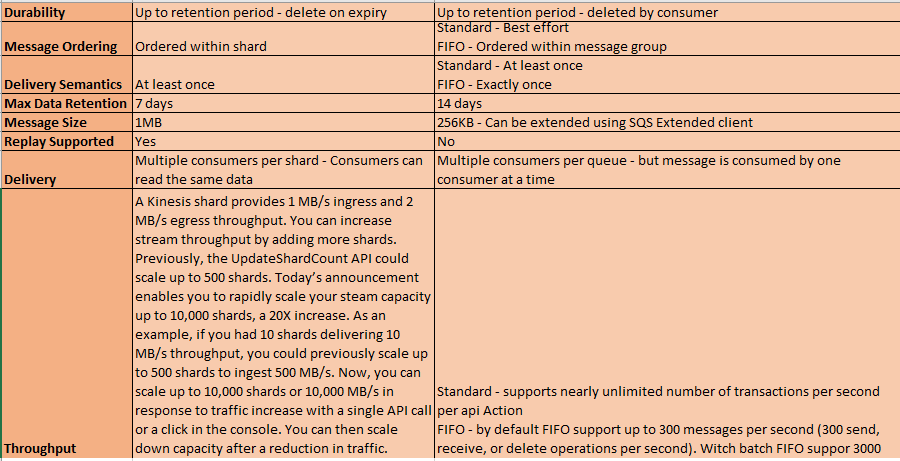

Kinesis vs SQS